sq

.wrangle | .data

sq is a free/libre open-source

data wrangling swiss-army knife

to inspect, query, join, import, and export data. You could think of sq

as jq

for databases and documents, facilitating one-liners

like:

sq '@postgres_db | .actor | .first_name, .last_name | .[0:5]'

Installation

brew install sq/bin/sh -c "$(curl -fsSL https://sq.io/install.sh)"scoop bucket add sq https://github.com/neilotoole/sq

scoop install sqInstall options for apt, yum, apk, pacman, yay over here

.

For help, sq help is your starting point. And then see the docs

.

Let’s get this out of the way

sq is pronounced like seek. Its query language, SLQ, is pronounced like sleek.

Feature Highlights

Some feature highlights are shown below. For more, see the docs , including the query guide , tutorial and cookbook .

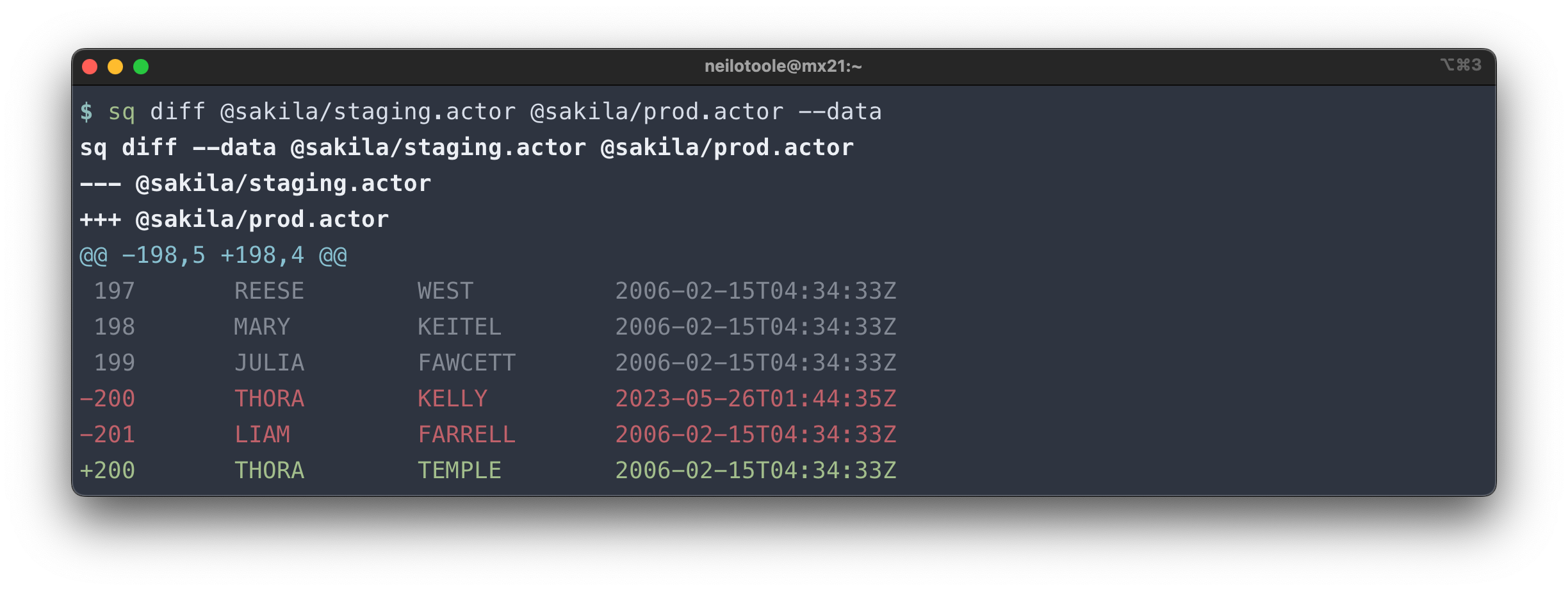

Diff database tables

Use the diff command to compare source metadata or row values.

Import Excel worksheet into Postgres table

Insert

the contents of an Excel XLSX worksheet (from a sheet named actor) into

a new Postgres table named xl_actor. Note that the import mechanism

is reasonably sophisticated in that it tries to preserve data types.

View metadata for a database

The --json flag to sq inspect

outputs schema and other metadata in JSON.

Typically the output is piped to jq to select the interesting elements.

Get names of all columns in a MySQL table

Even easier, just get the metadata for the table you want:

sq inspect @sakila_my.actor -j | jq -r '.columns[] | .name'

Execute SQL query against SQL Server, insert results to SQLite

This snippet adds a (pre-existing) SQL Server source, and creates a new SQLite source. Then, a raw native SQL query is executed against SQL Server , and the results are inserted into SQLite.

Export all database tables to CSV

Get the (JSON) metadata for the active source; pipe that JSON to jq and

extract the table names; pipe the table names

to xargs, invoking sq once for each table, outputting a CSV file per table. This snippet

was tested on macOS.

If you instead wanted to use sql mode:

sq inspect -j | jq -r '.tables[] | .name' | xargs -I % sq sql 'SELECT * FROM %' --csv --output %.csv

Source commands

Commands to add , activate , move , list , group , ping or remove sources.

$ sq src # show active source

$ sq add ./actor.tsv # add a source

$ sq src @actor_tsv # set active source

$ sq ls # list sources

$ sq ls -v # list sources (verbose)

$ sq group # get active group

$ sq group prod # set active group

$ sq mv @sales @prod/sales # rename a source

$ sq ping --all # ping all sources

$ sq rm @actor_tsv # remove a source

Database table commands

Convenient commands that act on database tables: copy , truncate , drop .

Note that sq tbl copy only applies within a single database.

If you want to copy a table from one database to another,

use the --insert

mechanism.

$ sq tbl copy .actor .actor2 # copy table "actor" to "actor2", creating if necessary

$ sq tbl truncate .actor2 # truncate table "actor2"

$ sq tbl drop .actor2 # drop table "actor2"

Query JSONL (e.g. log files)

JSONL

output is a row of JSON per line (hence “JSON Lines”). Lots of log output is like this.

We can use sq’s own log

output as an example:

{"level":"debug","time":"00:07:48.799992","caller":"sqlserver/sqlserver.go:452:(*database).Close","msg":"Close database: @sakila_mssql | sqlserver | sqlserver://sakila:xxxxx@localhost?database=sakila"}

{"level":"debug","time":"00:07:48.800016","caller":"source/files.go:323:(*Files).Close","msg":"Files.Close invoked: has 1 clean funcs"}

{"level":"debug","time":"00:07:48.800031","caller":"source/files.go:61:NewFiles.func1","msg":"About to clean fscache from dir: /var/folders/68/qthwmfm93zl4mqdw_7wvsv7w0000gn/T/sq_files_fscache_2273841732"}